Introduction to Kolmogorov–Arnold Networks (KANs)

In the rapidly evolving world of artificial intelligence, neural networks stand as cornerstones, powering countless applications from image recognition to natural language processing. Among these, Multi-Layer Perceptrons (MLPs) have long been fundamental, known for their robustness and versatility in modeling complex, nonlinear functions. MLPs are celebrated for their expressive power, primarily attributed to the Universal Approximation Theorem, which states that an MLP with a suitable nonlinear activation and enough hidden units can approximate any continuous function on a compact domain to arbitrary accuracy. However, despite their widespread adoption, MLPs come with inherent limitations, particularly in terms of parameter efficiency and interpretability.

Enter Kolmogorov–Arnold Networks (KANs), a groundbreaking alternative inspired by the Kolmogorov-Arnold representation theorem. This new class of neural networks proposes a shift from the fixed activation functions of MLPs to adaptable activation functions on the connections between nodes, offering a fresh perspective on network design. Unlike traditional MLPs that utilize a static architecture of weights and biases, KANs introduce a dynamic framework where each connection weight is replaced by a learnable univariate function, typically parameterized as a spline. This subtle yet profound modification enhances the model’s flexibility. Although each edge now carries several spline coefficients instead of a single weight, it can significantly reduce the network size and the total number of parameters required for a given accuracy.

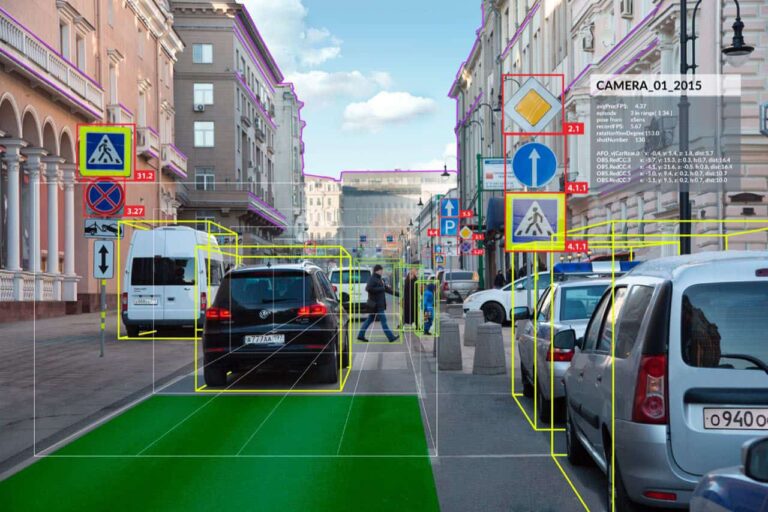

The introduction of KANs marks a pivotal shift in neural network architecture, promising not just improvements in accuracy and efficiency, but also a leap towards models that are inherently more interpretable. This interpretability is crucial for applications requiring transparency in decision-making processes, such as in medical diagnostics and autonomous driving. Moreover, the flexibility of KANs opens up new possibilities for machine learning models, making them more adaptable and potentially more powerful in handling complex datasets and problems.

As we delve deeper into this article, we will explore the intricacies of KANs, compare their performance to traditional MLPs, and discuss their potential to not only enhance existing applications but also to pioneer new avenues in scientific research and machine learning. The promise of KANs is not just in their theoretical novelty, but in their potential to fundamentally change how we think about, design, and utilize neural networks in the pursuit of artificial intelligence.

Understanding Kolmogorov–Arnold Networks (KANs)

The genesis of Kolmogorov–Arnold Networks (KANs) is deeply rooted in the Kolmogorov-Arnold representation theorem, a seminal result in mathematics that profoundly influences their design and functionality. This theorem shows that any continuous multivariate function on a bounded domain can be expressed as a finite superposition of continuous functions of one variable, combined by addition. KANs are built to leverage this insight, thereby reimagining the structure and capabilities of neural networks.

Theoretical Foundation

Unlike Multi-Layer Perceptrons (MLPs) that are primarily inspired by the Universal Approximation Theorem, KANs draw from the Kolmogorov-Arnold representation theorem. This theorem asserts that any continuous function of several variables on a bounded domain can be represented as a finite composition of continuous functions of one variable and the addition operation. KANs operationalize this theorem by implementing a neural architecture where the traditional linear weight matrices and fixed activation functions are replaced with dynamic, learnable univariate functions along each connection, or “edge”, between nodes in the network.
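For reference, the theorem is usually written as follows for a continuous function f on the unit cube [0, 1]^n. The symbols Φ_q (outer functions) and φ_{q,p} (inner functions) are the standard textbook notation, not names taken from this article:

$$
f(x_1, \dots, x_n) = \sum_{q=1}^{2n+1} \Phi_q\!\left( \sum_{p=1}^{n} \phi_{q,p}(x_p) \right)
$$

Every φ_{q,p} and Φ_q here is a continuous function of a single variable, so the only genuinely multivariate operation in the formula is addition. Read as a network, this is a two-layer structure with n inputs and 2n+1 hidden sums; KANs generalize the idea to arbitrary widths and depths.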

Architectural Shifts

The most distinctive feature of KANs compared to traditional MLPs is the placement of activation functions. While MLPs apply fixed activation functions at the nodes (neurons) of the network:

- MLP(x) = (W3 ∘ σ2 ∘ W2 ∘ σ1 ∘ W1)(x)

KANs instead place learnable activation functions on the edges (weights), eliminating linear weights entirely:

- KAN(x) = (Φ3 ∘ Φ2 ∘ Φ1)(x)

Here, each Φ represents a learnable function, typically parameterized as a spline, that directly modifies the signal transmitted between layers. This architecture not only simplifies the computation graph but also enhances the network’s ability to model complex patterns through more direct manipulation of data flow.
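The composition above can be sketched in a few lines of plain Python. This is a minimal illustration, not the reference implementation: the names `EdgeFunction`, `KANLayer`, and `kan_forward` are hypothetical, and each edge uses a piecewise-linear function on a uniform grid as a stand-in for the B-splines typically used in practice.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class EdgeFunction:
    """Learnable univariate function on one edge (assumption: piecewise-linear,
    i.e. linear interpolation between learnable values on a uniform grid)."""
    lo: float
    hi: float
    values: List[float]  # learnable parameters: the function value at each grid node

    def __call__(self, x: float) -> float:
        x = min(max(x, self.lo), self.hi)          # clamp input to the grid range
        n = len(self.values) - 1                   # number of grid intervals
        t = (x - self.lo) / (self.hi - self.lo) * n
        i = min(int(t), n - 1)                     # index of the interval containing x
        frac = t - i
        return (1.0 - frac) * self.values[i] + frac * self.values[i + 1]


class KANLayer:
    """One layer Phi: output j is the sum over inputs i of phi[j][i](x[i])."""

    def __init__(self, n_in: int, n_out: int, grid_points: int = 6,
                 lo: float = -1.0, hi: float = 1.0, seed: int = 0):
        rng = random.Random(seed)
        self.edges = [
            [EdgeFunction(lo, hi, [rng.uniform(-0.1, 0.1) for _ in range(grid_points)])
             for _ in range(n_in)]
            for _ in range(n_out)
        ]

    def __call__(self, x: List[float]) -> List[float]:
        # No weight matrix and no node activation: nodes only add edge outputs.
        return [sum(phi(xi) for phi, xi in zip(row, x)) for row in self.edges]


def kan_forward(layers: List[KANLayer], x: List[float]) -> List[float]:
    """KAN(x) = (Phi_L o ... o Phi_1)(x)."""
    for layer in layers:
        x = layer(x)
    return x


# Usage: a 2 -> 3 -> 1 network evaluated on one input point.
network = [KANLayer(2, 3, seed=1), KANLayer(3, 1, seed=2)]
print(kan_forward(network, [0.5, -0.2]))
```

The point of the sketch is structural: all learnable parameters live inside the edge functions, and a node does nothing except sum its incoming edges.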

Advantages Over Traditional MLPs

The reconfiguration of activation functions and elimination of linear weight matrices result in several key advantages:

- Parameter Efficiency: Each weight in an MLP is replaced by a spline function in KANs, which can adapt its shape during training. A single spline edge has more parameters than a single scalar weight, but this adaptability often allows a much smaller KAN to match a larger MLP, so high accuracy can be reached with significantly fewer parameters overall.

- Flexibility and Adaptability: By employing splines, KANs can more finely tune their responses to the input data, offering a more nuanced adaptation to complex data patterns than the relatively rigid structure of MLPs.

- Interpretability: The structure of KANs facilitates a clearer understanding of how inputs are transformed through the network. Each spline function’s effect on the data is more observable and understandable than the often opaque transformations in deep MLPs.
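The parameter-efficiency point is easier to judge with a rough count. The sketch below assumes each KAN edge is a spline with (grid intervals + spline order) coefficients and ignores any extra per-edge scaling terms an actual implementation may add. The function names and the layer widths are illustrative choices, not results from any benchmark.

```python
from typing import Sequence


def mlp_param_count(widths: Sequence[int]) -> int:
    """Weights plus biases of a fully connected MLP with the given layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(widths, widths[1:]))


def kan_param_count(widths: Sequence[int], grid_intervals: int = 5,
                    spline_order: int = 3) -> int:
    """Approximate KAN size: one spline per edge, each with
    (grid_intervals + spline_order) coefficients."""
    per_edge = grid_intervals + spline_order
    return sum(n_in * n_out * per_edge for n_in, n_out in zip(widths, widths[1:]))


print(mlp_param_count([2, 5, 1]))        # 21
print(kan_param_count([2, 5, 1]))        # 120
print(mlp_param_count([2, 64, 64, 1]))   # 4417
```

For the same shape, the KAN is several times larger than the MLP. The efficiency argument therefore rests on a small KAN matching the accuracy of a much wider or deeper MLP, not on each edge being cheaper.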

Visual Comparison

Illustratively, while MLPs rely on a combination of weight matrices and non-linear activation functions applied in a fixed sequence, KANs create a fluid network of functions that dynamically adjust based on the data. This difference is not just architectural but conceptual, pushing forward the boundaries of what neural networks can learn and represent.

In summary, KANs represent a novel and promising evolution in neural network design. They offer a compelling alternative to MLPs by integrating the flexibility of function-based learning with the robustness of traditional neural network structures. This blend of mathematical rigor and practical design sets the stage for a deeper exploration of their performance and applications, as discussed in the following sections.

Advantages of KANs Over Traditional MLPs

Kolmogorov–Arnold Networks (KANs) offer several significant advantages over traditional Multi-Layer Perceptrons (MLPs), particularly in terms of model efficiency, accuracy, and interpretability. This section delves into these benefits, highlighting the innovative aspects of KANs that potentially revolutionize neural network applications.

Enhanced Accuracy and Efficiency

One of the standout features of KANs is their ability to achieve higher accuracy with fewer parameters compared to MLPs. This advantage is underpinned by the unique architectural elements of KANs that allow for a more direct and flexible manipulation of input data through learnable activation functions on each edge of the network.

- Reduced Model Complexity: By replacing the typical weight matrices in MLPs with spline-based functions that act on edges, KANs can often use far narrower and shallower networks. Even though each edge stores several spline coefficients, the total number of parameters needed for a given accuracy can drop substantially. This reduction in size can lead to more efficient training and faster convergence.

- High Precision in Data Fitting and PDE Solving: KANs have demonstrated superior performance in complex tasks such as data fitting and solving partial differential equations (PDEs). For instance, in applications requiring high precision, such as numerical simulation and predictive modeling, KANs have been reported to outperform MLPs by orders of magnitude in both accuracy and parameter efficiency.

Improved Interpretability

Interpretability is a critical aspect in many AI applications, particularly in fields like healthcare, finance, and autonomous systems where understanding model decisions is crucial. KANs inherently offer better interpretability due to their structural advantages:

- Visual Clarity of Function Transformations: The use of spline functions allows for a clear visual interpretation of how inputs are transformed through the network. Unlike MLPs, where the transformation through layers can be opaque, KANs provide a more transparent view of the data flow and transformation.

- Ease of Modification and Interaction: The functional approach of KANs not only simplifies the understanding of each layer’s impact but also allows easier modifications to meet specific needs or constraints, facilitating user interaction and customization.
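One way to make this kind of inspection concrete is to sample a learned edge function on a grid and check which simple formula it most resembles. The sketch below is an assumption-laden illustration: `best_symbolic_match` and the small `CANDIDATES` table are hypothetical names, and squared Pearson correlation is used as the similarity score because it ignores an affine rescaling of the output.

```python
import math
from typing import Callable, Dict, List, Tuple


def pearson(a: List[float], b: List[float]) -> float:
    """Pearson correlation of two equal-length samples (0.0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0.0 or var_b == 0.0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


# Hypothetical library of candidate symbolic forms.
CANDIDATES: Dict[str, Callable[[float], float]] = {
    "x": lambda x: x,
    "x^2": lambda x: x * x,
    "sin": math.sin,
    "exp": math.exp,
}


def best_symbolic_match(phi: Callable[[float], float], lo: float = -1.0,
                        hi: float = 1.0, samples: int = 101
                        ) -> Tuple[str, Dict[str, float]]:
    """Score each candidate g by r^2 between phi(x) and g(x) on a uniform grid."""
    xs = [lo + (hi - lo) * i / (samples - 1) for i in range(samples)]
    ys = [phi(x) for x in xs]
    scores = {name: pearson(ys, [g(x) for x in xs]) ** 2
              for name, g in CANDIDATES.items()}
    return max(scores, key=scores.get), scores


# Usage: pretend this lambda is an edge function read out of a trained network.
name, scores = best_symbolic_match(lambda x: 3 * x * x - 1)
print(name, scores)
```

Because every edge is a function of one variable, this check is a one-dimensional curve comparison. Nothing comparable is available for a single scalar weight inside an MLP.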

Theoretical and Empirical Validation

The theoretical foundations of KANs provide robustness to their design, which is empirically validated through extensive testing and application.

- Neural Scaling Laws: Theoretically, KANs exhibit more favorable neural scaling laws than MLPs, meaning that test loss is expected to fall faster as the number of parameters grows. This implies that as the network scales, KANs maintain or improve performance more effectively than MLPs, particularly in environments with large-scale data.

- Empirical Studies: Across various studies, KANs have been shown not only to perform better in standard tasks but also to discover underlying patterns and laws in scientific data, demonstrating their utility as tools for scientific discovery.
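A neural scaling law is commonly written as loss ≈ C · N^(−α), where N is the parameter count and a larger exponent α means faster improvement. The sketch below estimates α from (N, loss) pairs by a least-squares fit in log-log space. The function name `fit_scaling_exponent` is hypothetical, and the numbers in the usage lines are synthetic values generated from the formula itself, not measurements.

```python
import math
from typing import Sequence


def fit_scaling_exponent(param_counts: Sequence[float],
                         losses: Sequence[float]) -> float:
    """Return alpha in loss ~ C * N**(-alpha), via the least-squares slope of
    log(loss) against log(N)."""
    xs = [math.log(n) for n in param_counts]
    ys = [math.log(loss) for loss in losses]
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return -slope


# Usage with synthetic data that follows loss = 2 * N**(-4) exactly.
sizes = [100, 200, 400, 800]
synthetic_losses = [2.0 * n ** -4 for n in sizes]
print(fit_scaling_exponent(sizes, synthetic_losses))
```

Applying the same fit to measured losses from a KAN and an MLP trained on the same task is one way to compare their scaling behavior directly.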

Case Studies

Several case studies illustrate the practical benefits of KANs over MLPs:

- In mathematical applications, such as symbolic regression or complex function approximation, KANs have successfully identified and modeled intricate patterns that were challenging for traditional MLPs.

- In physics and engineering, KANs have been applied to model and solve intricate problems, from fluid dynamics simulations to structural optimization, with greater accuracy and fewer computational resources than equivalent MLP models.

Overall, Kolmogorov–Arnold Networks represent a significant step forward in neural network technology. Their ability to combine reduced model complexity with enhanced interpretability and superior performance makes them a compelling choice for both current and future AI applications. The next section will delve into specific use cases and potential impacts of KANs on scientific research and industry applications, further highlighting their transformative potential.

Empirical Performance and Theoretical Insights

Kolmogorov–Arnold Networks (KANs) are not only theoretically innovative but also demonstrate superior empirical performance across a range of applications. This section explores the empirical evidence supporting the effectiveness of KANs, alongside the theoretical insights that underpin their advanced capabilities.

Demonstrated Superiority in Diverse Applications

KANs have been applied successfully in a variety of domains, showing remarkable improvements over traditional MLPs. Here are a few notable examples:

- Data Fitting: KANs have shown the ability to fit complex data sets with high accuracy and fewer parameters. For example, in tasks involving the fitting of non-linear functions, KANs have outperformed MLPs by achieving lower mean squared errors with significantly reduced model complexity.

- Solving Partial Differential Equations (PDEs): In the realm of computational physics and engineering, KANs have proven especially potent. They have solved PDEs with greater precision and efficiency, often requiring smaller computational graphs than MLPs, which translates into faster computation and less resource consumption.
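To show what data fitting with a learnable univariate function involves, the sketch below trains a single edge function to fit samples of sin(πx) by full-batch gradient descent on the mean squared error. It is a toy under stated assumptions: the edge is piecewise-linear rather than a B-spline, and `hat_weights`, `fit_edge_function`, the learning rate, and the epoch count are all illustrative choices.

```python
import math
from typing import List, Tuple


def hat_weights(x: float, lo: float, hi: float, n_nodes: int) -> Tuple[int, float, float]:
    """Linear-interpolation weights: returns (i, w_i, w_{i+1}) for the grid
    interval [node i, node i+1] that contains x."""
    x = min(max(x, lo), hi)
    t = (x - lo) / (hi - lo) * (n_nodes - 1)
    i = min(int(t), n_nodes - 2)
    frac = t - i
    return i, 1.0 - frac, frac


def fit_edge_function(xs: List[float], ys: List[float], lo: float = -1.0,
                      hi: float = 1.0, n_nodes: int = 11, lr: float = 4.0,
                      epochs: int = 300) -> Tuple[List[float], List[float]]:
    """Fit node values by gradient descent; returns (values, per-epoch MSE)."""
    values = [0.0] * n_nodes
    history = []
    for _ in range(epochs):
        grad = [0.0] * n_nodes
        squared_error = 0.0
        for x, y in zip(xs, ys):
            i, w0, w1 = hat_weights(x, lo, hi, n_nodes)
            err = w0 * values[i] + w1 * values[i + 1] - y
            squared_error += err * err
            # Each sample only touches the two nodes around it.
            grad[i] += 2.0 * err * w0
            grad[i + 1] += 2.0 * err * w1
        values = [v - lr * g / len(xs) for v, g in zip(values, grad)]
        history.append(squared_error / len(xs))
    return values, history


# Usage: fit sin(pi * x) on [-1, 1] from 201 evenly spaced samples.
sample_x = [-1.0 + 2.0 * i / 200 for i in range(201)]
sample_y = [math.sin(math.pi * x) for x in sample_x]
node_values, mse_history = fit_edge_function(sample_x, sample_y)
print(mse_history[0], mse_history[-1])
```

Each sample updates only the two grid nodes adjacent to it, which is the locality property that spline parameterizations share and that dense weight matrices do not.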

Empirical Validation through Case Studies

Specific case studies underscore the practical advantages of KANs:

- Scientific Discovery: In fields like physics and chemistry, KANs have helped researchers uncover underlying physical laws and chemical properties from experimental data, acting almost as collaborative tools in the scientific discovery process.

- Machine Learning and AI: In more traditional machine learning tasks, such as image and speech recognition, KANs have demonstrated their ability to learn more effective representations with fewer training iterations, facilitating faster and more scalable AI solutions.

Theoretical Advancements

The theoretical framework of KANs offers insights into why these networks perform effectively:

- Neural Scaling Laws: KANs benefit from favorable neural scaling laws, which suggest that their performance improves consistently as network size increases, without the diminishing returns often observed in MLPs.

- Function Approximation Capabilities: The structure of KANs inherently supports a more flexible function approximation capability, which can be attributed to their use of spline-based activation functions. This flexibility allows KANs to model a wider range of functions directly compared to the layered linear transformations in MLPs.
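For readers who want to see what a spline-based activation computes, here is a minimal sketch using the Cox-de Boor recursion for B-spline basis functions. The helper names (`bspline_basis`, `uniform_knots`, `spline_activation`) and the choice to extend the uniform grid by k knots on each side are assumptions made for illustration, not details taken from this article.

```python
from typing import List, Sequence


def bspline_basis(i: int, k: int, knots: Sequence[float], x: float) -> float:
    """Cox-de Boor recursion: value at x of the i-th B-spline basis function
    of degree k (k = 3 gives cubic splines)."""
    if k == 0:
        return 1.0 if knots[i] <= x < knots[i + 1] else 0.0
    left_den = knots[i + k] - knots[i]
    right_den = knots[i + k + 1] - knots[i + 1]
    left = 0.0
    if left_den != 0.0:
        left = (x - knots[i]) / left_den * bspline_basis(i, k - 1, knots, x)
    right = 0.0
    if right_den != 0.0:
        right = ((knots[i + k + 1] - x) / right_den
                 * bspline_basis(i + 1, k - 1, knots, x))
    return left + right


def uniform_knots(lo: float, hi: float, grid_intervals: int, k: int) -> List[float]:
    """Uniform knot vector covering [lo, hi], extended by k knots on each side."""
    h = (hi - lo) / grid_intervals
    return [lo + (j - k) * h for j in range(grid_intervals + 2 * k + 1)]


def spline_activation(coeffs: Sequence[float], k: int,
                      knots: Sequence[float], x: float) -> float:
    """phi(x) = sum_i c_i * B_i(x); the coefficients c_i are the learnable part."""
    return sum(c * bspline_basis(i, k, knots, x) for i, c in enumerate(coeffs))


# Usage: a cubic spline on [-1, 1] with 5 grid intervals has 5 + 3 = 8 coefficients.
knots = uniform_knots(-1.0, 1.0, grid_intervals=5, k=3)
coeffs = [0.0, 0.2, -0.1, 0.5, 0.3, -0.4, 0.1, 0.0]
print(spline_activation(coeffs, 3, knots, 0.3))
```

Inside the grid range the basis functions sum to one, so setting every coefficient to the same constant reproduces that constant. Changing a single coefficient reshapes the function only near the corresponding knots.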

Improvements in Training Dynamics

The training process of KANs also exhibits several improvements over traditional approaches:

- Efficiency in Learning: KANs typically require fewer epochs to converge to optimal solutions, largely due to their efficient use of parameters and the direct method of function approximation.

- Stability and Generalization: KANs have shown greater stability during training and superior generalization capabilities on unseen data, likely due to their inherent regularization effects from spline functions.

Quantitative Comparisons

Quantitative metrics further validate the superiority of KANs over MLPs:

- Accuracy and Parameter Efficiency: Studies have quantified the accuracy gains and parameter efficiency of KANs across various benchmarks, showing that KANs can achieve similar or better performance with up to an order of magnitude fewer parameters.

- Computational Overheads: Each KAN edge evaluates a spline, which costs more than the single multiplication performed by an MLP weight. KANs can nonetheless require less total computation when their streamlined architecture reaches the target accuracy with far fewer edges, reducing the extensive matrix operations that are typical in MLPs.

The empirical and theoretical insights presented in this section highlight the significant advantages of KANs, confirming their potential to transform the landscape of neural network architectures. Their enhanced accuracy, efficiency, and adaptability make them suitable for a wide range of applications, from scientific research to real-world AI tasks. The next section will delve into potential applications and the broader impact of KANs, exploring how they can be integrated into existing systems and what new capabilities they enable.

Potential Applications and Impact on Science

Kolmogorov–Arnold Networks (KANs) hold immense potential to revolutionize various fields of science and technology. This section discusses the broad applications of KANs and their potential to facilitate significant advances in research and industry.

Enhancing Scientific Research

KANs can serve as powerful tools in the arsenal of scientific research, providing new methodologies for data analysis and model creation:

- Physics and Astronomy: In fields that often deal with complex mathematical models and large datasets, such as physics and astronomy, KANs can help simulate and understand phenomena that are otherwise computationally expensive or difficult to model with traditional methods.

- Chemistry and Material Science: KANs can predict the properties of new materials or chemical reactions by learning from known data, potentially accelerating the discovery of new materials and drugs.

Advancing Machine Learning and Artificial Intelligence

The flexibility and efficiency of KANs make them especially suitable for enhancing machine learning and AI applications:

- Deep Learning Enhancements: By integrating KANs into existing deep learning architectures, researchers can create more efficient and interpretable models for tasks like image recognition, natural language processing, and more.

- Robust AI Systems: The inherent interpretability and efficient data handling of KANs contribute to building more robust and reliable AI systems, particularly in critical applications such as autonomous driving and medical diagnosis.

Industry Applications

The unique characteristics of KANs translate into practical benefits for various industries:

- Finance and Economics: In the finance sector, KANs can improve risk assessment models and algorithmic trading by providing more accurate predictions and analyses of market data.

- Energy Sector: KANs can be used to optimize energy usage and predict system failures in power grids, potentially enhancing the efficiency and reliability of energy distribution.

Technological Innovations

KANs also drive technological innovation by enabling new functionalities and improving existing technologies:

- Internet of Things (IoT) and Edge Computing: With their low computational overhead, KANs are ideal for deployment in IoT devices and edge computing scenarios, where processing power is limited.

- Augmented and Virtual Reality: KANs can improve real-time processing for AR and VR applications, enhancing user experience by providing faster and more accurate responses.

Educational and Collaborative Tools

Beyond practical applications, KANs can serve as educational tools, helping students and researchers understand complex systems and models through visualization and interaction:

- Interactive Learning Platforms: By incorporating KANs into educational software, educators can offer students a more interactive and engaging way to learn about complex mathematical concepts and computational models.

- Collaborative Research Tools: KANs can facilitate collaborative research efforts by providing a common platform that enhances the interpretability and usability of shared models.

The potential applications of Kolmogorov–Arnold Networks extend across scientific disciplines, industries, and technologies, positioning them as a transformative force in the advancement of both theoretical and applied sciences. As we continue to explore the capabilities and refine the architecture of KANs, their impact on various sectors is expected to grow, heralding a new era of innovation and discovery in the field of artificial intelligence. The final section will discuss future directions for KAN research and development, underscoring the ongoing need for exploration and adaptation in this exciting area.

Concluding Remarks and Future Prospects

As we have explored the capabilities and advantages of Kolmogorov–Arnold Networks (KANs) throughout this article, it is evident that KANs offer transformative potential for both theoretical research and practical applications. This final section provides concluding thoughts on the current state of KAN research and outlines future prospects for their development and implementation.

Strengthening Theoretical Foundations

While KANs are grounded in the robust mathematical framework of the Kolmogorov-Arnold representation theorem, ongoing research is crucial to uncover deeper theoretical insights:

- Complexity and Optimization: Further studies are needed to understand the computational complexity of KANs better and to develop more efficient algorithms for their training and optimization.

- Expanding Theoretical Models: Extending the theoretical models to incorporate recent advances in statistical learning theory could provide a more comprehensive understanding of the learning dynamics and capacity of KANs.

Enhancing Technological Implementation

Technological advancements will play a critical role in realizing the full potential of KANs:

- Integration with Existing Technologies: Integrating KANs with current machine learning frameworks and data processing pipelines can enhance their applicability across various sectors, including technology, finance, and healthcare.

- Improving Scalability and Efficiency: Addressing scalability and efficiency challenges will be vital for deploying KANs in large-scale applications, ensuring they can handle vast amounts of data and complex problem-solving scenarios efficiently.

Expanding Application Horizons

The versatility of KANs suggests they could be pivotal in numerous domains, but broader application horizons need to be explored and validated:

- Interdisciplinary Research: Leveraging KANs for interdisciplinary research could lead to breakthroughs in areas such as bioinformatics, quantum computing, and complex systems modeling.

- Industry-specific Solutions: Developing customized solutions for specific industries, such as predictive maintenance in manufacturing, personalized medicine, and real-time analytics in financial services, could demonstrate the practical value of KANs and encourage wider adoption.

Addressing Ethical and Societal Implications

As with any advanced AI technology, the deployment of KANs involves careful consideration of ethical and societal implications:

- Ethical AI Frameworks: Developing frameworks to ensure ethical considerations are integrated into KAN deployment, focusing on transparency, fairness, and accountability.

- Public Awareness and Education: Engaging with the public and policymakers to educate them about the benefits and limitations of KANs, fostering informed discussions about their use in sensitive and impactful areas.

Collaborative Ventures and Community Building

The success of KANs will also depend on the collaborative efforts within the scientific community and between academia and industry:

- Open Innovation and Collaboration: Encouraging open innovation through shared datasets, open-source software, and collaborative research projects can accelerate advancements in KAN technology.

- Building a KAN-focused Community: Establishing a global community of researchers, practitioners, and educators dedicated to KANs can foster a collaborative environment for sharing knowledge, best practices, and innovative applications.

Kolmogorov–Arnold Networks represent a promising paradigm shift in neural network architecture, with the potential to impact numerous scientific and practical fields profoundly. As we move forward, the continued exploration, development, and ethical deployment of KANs will be crucial in harnessing their full potential and ensuring they contribute positively to society. The journey of KANs is just beginning, and their future prospects are as exciting as they are boundless.

Conclusion

As we conclude this exploration of Kolmogorov–Arnold Networks (KANs), it’s clear that KANs represent a significant advancement in the field of neural networks, offering a novel approach that challenges traditional paradigms and opens new avenues for research and application. With their unique architecture based on the principles of the Kolmogorov-Arnold representation theorem, KANs provide a robust framework for tackling complex computational tasks with enhanced efficiency, accuracy, and interpretability.

KANs have demonstrated substantial benefits over traditional Multi-Layer Perceptrons (MLPs), including superior performance in data fitting, problem-solving in physics and engineering, and potential for scientific discovery. The flexibility in their design allows for significant reductions in parameter count while maintaining or even enhancing model performance. Moreover, the inherent interpretability of KANs offers a clearer understanding of model decisions, which is crucial for applications in fields where transparency is essential.